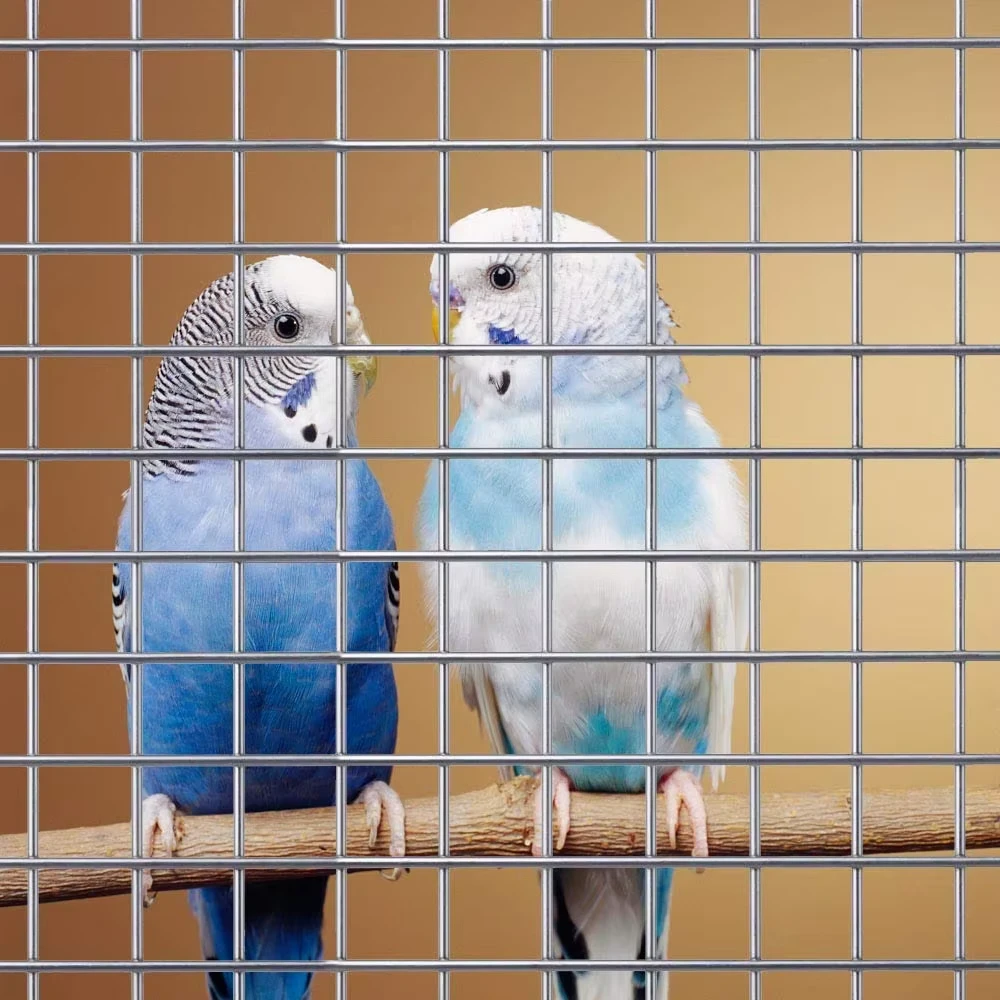

BirdNet: Revolutionizing Avian Research and Conservation Through Technology

In an age where technology permeates nearly every aspect of our lives, ornithology is no exception. BirdNet has emerged as an important tool for bird identification and conservation. Using machine learning, it helps researchers and birdwatchers identify bird species by their sounds and contributes data to avian research and conservation efforts worldwide.

BirdNet was developed by researchers at the Cornell Lab of Ornithology, in collaboration with Chemnitz University of Technology, with the aim of creating a user-friendly app that identifies birds from their songs and calls. The initiative taps into the growing interest in citizen science, encouraging bird enthusiasts of all experience levels to take part in data collection and conservation. The app analyzes audio recordings with a neural network trained on labeled bird sounds, so users can find out which species are present nearby simply by recording what they hear. This approach invites people to engage actively with nature and fosters a deeper connection to the environment.
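To make "analyzing audio recordings" a little more concrete: sound classifiers of this kind commonly convert a recording into a spectrogram, a picture of how much energy each frequency carries over time, and the neural network then works on that picture. The following is a minimal illustrative sketch in Python with NumPy, not BirdNet's actual pipeline. It computes a plain magnitude spectrogram of a synthetic tone, and the frame length, hop size, sample rate, and 3 kHz test tone are all arbitrary assumptions chosen for the example.

```python
import numpy as np

def spectrogram(signal: np.ndarray, frame_len: int = 512, hop: int = 256) -> np.ndarray:
    """Magnitude spectrogram: one row per time frame, one column per frequency bin."""
    window = np.hanning(frame_len)  # taper each frame to reduce spectral leakage
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([signal[i * hop : i * hop + frame_len] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))  # keep only non-negative frequencies

# Hypothetical stand-in for a recording: one second of a 3 kHz tone sampled at 48 kHz.
sr = 48_000
frame_len = 512
t = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 3_000 * t)

spec = spectrogram(tone, frame_len=frame_len)
peak_bin = int(spec.mean(axis=0).argmax())  # strongest frequency bin, averaged over time
peak_hz = peak_bin * sr / frame_len         # convert the bin index to Hz
print(spec.shape, peak_hz)  # (186, 257) 3000.0
```

A real identification system adds steps this sketch leaves out, such as mapping frequencies onto a perceptual scale and splitting long recordings into short chunks before passing them to the classifier.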

But BirdNet is not just a tool for individual birdwatchers; it plays a crucial role in scientific research and conservation initiatives. By gathering audio data from a wide range of locations, the app facilitates large-scale monitoring of bird populations. This aspect of the project aligns with global conservation efforts, as it provides vital information about species distribution, migration patterns, and population dynamics. The data collected can inform conservationists about species that are declining or at risk, prompting timely action to protect their habitats.
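Turning many individual recordings into population information is largely a matter of aggregation. As a minimal sketch, assuming each automated detection carries a recording site, a species label, and a confidence score (the `Detection` record, the 0.5 threshold, and the sample data are all hypothetical), low-confidence detections can be discarded and the rest tallied per site and species:

```python
from collections import Counter
from typing import Iterable, NamedTuple

class Detection(NamedTuple):
    """Hypothetical record for one automated detection."""
    site: str
    species: str
    confidence: float  # classifier score in [0, 1]

def species_counts(detections: Iterable[Detection], min_confidence: float = 0.5) -> Counter:
    """Count detections per (site, species), ignoring those below min_confidence."""
    return Counter(
        (d.site, d.species) for d in detections if d.confidence >= min_confidence
    )

# Hypothetical detections from two recording sites.
detections = [
    Detection("marsh", "Red-winged Blackbird", 0.92),
    Detection("marsh", "Red-winged Blackbird", 0.81),
    Detection("marsh", "Common Yellowthroat", 0.34),  # below threshold, dropped
    Detection("forest", "Wood Thrush", 0.77),
    Detection("forest", "Red-winged Blackbird", 0.55),
]

counts = species_counts(detections)
for (site, species), n in sorted(counts.items()):
    print(site, species, n)
```

Raising `min_confidence` trades missed detections for fewer false positives. Real monitoring studies also have to account for differences in recording effort between sites before comparing counts.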

Furthermore, BirdNet exemplifies the power of crowdsourcing in science. As more people use the app and contribute recordings, the dataset grows, providing more material that can be used to evaluate and improve the model's predictions. This collaboration between scientists and citizen scientists creates a feedback loop: broader participation yields more data, and more data makes the tool more useful, including for following changes in bird activity as environmental conditions shift. Because amateur birdwatchers record in many different places, their involvement widens the geographic range of data collection and supports a more comprehensive understanding of bird populations across different ecosystems.

The impact of BirdNet extends beyond individual and scientific applications; it plays a significant role in education and awareness. By providing an accessible platform for identifying birds, the app fosters greater awareness of avian diversity and the importance of conservation efforts. It encourages users to engage with local ecosystems, understand their ecological significance, and recognize the threats facing various species due to habitat loss, climate change, and other anthropogenic factors.

Moreover, BirdNet emphasizes the importance of sound in identifying and understanding wildlife. Many people are accustomed to identifying birds by sight, but sound offers an often-overlooked dimension of avian behavior. By focusing on audio recognition, BirdNet highlights the richness of bird communication and encourages users to appreciate subtleties of nature that might otherwise go unnoticed.

In conclusion, BirdNet represents a significant step forward at the intersection of technology and ornithology. By combining machine learning with citizen science, it provides a valuable tool for bird identification, research, and conservation. As environmental challenges mount, initiatives like BirdNet help build understanding of biodiversity and encourage active participation in conservation. Through the collective efforts of scientists and enthusiasts alike, BirdNet is not only expanding our knowledge of birds but also contributing to the preservation of the natural world for future generations. As more people take up birdwatching and sound identification, the possibilities for avian conservation will only grow.